Perceptual hashing

Visual similarity hashes in Ruby

Hashing is a common technique to take an input, apply a function and get an output.

Arguably the most popular hashing function is still md5

md5 -s "Powerman5000"

# 05e8c3dfc925df18c0b65f20a714c805The particularity of this is that small differences between the input would yield different results.

md5 -s "powerman5000"

# 089ab1a8a649e1ff31b72e96b200bde1The hashes being different also mean that the vectors they represent are very different.

Of course the same concept applies when we create an md5 hash of an image.

Let's take this image for example:

A 1000px width version of this photo yields this hash:

curl -s "https://images.unsplash.com/photo-1503058474900-cb76710f9cd1?crop=entropy&cs=tinysrgb&fit=max&fm=jpg&ixid=M3wxMTc3M3wwfDF8c2VhcmNofDI0fHxuZW9ufGVufDB8fHx8MTc1ODMwNzQ0Mnww&ixlib=rb-4.1.0&q=80&w=1000" | md5sum

# d3cff71ce9d89d5efc1e55d611cd9d7dWhile a 500x version yields this:

curl -s "https://images.unsplash.com/photo-1503058474900-cb76710f9cd1?crop=entropy&cs=tinysrgb&fit=max&fm=jpg&ixid=M3wxMTc3M3wwfDF8c2VhcmNofDI0fHxuZW9ufGVufDB8fHx8MTc1ODMwNzQ0Mnww&ixlib=rb-4.1.0&q=80&w=500" | md5sum

# 86721c719f12ebc8706d4399bc2ab74fSo the exact same version of the photo generates hashes d3cff71ce9d89d5efc1e55d611cd9d7d and 86721c719f12ebc8706d4399bc2ab74f while their contents are pretty much the same (sans compression and literally having less pixels)

MD5 compare

I've been obsessed with perceptual hashing for many years. The concept of extracting features of something and get a similarity distance is something that I find enticing.

The whole idea consists that two things that are visually similar will return similar hashes. Even the same depending on the conditions.

PH

Just because why not I created github.com/elcuervo/ph which is a pure Ruby implementation of perceptual hashing. What I wanted with this is a zero dependency implementation so the concepts are easy to understand.

Hamming

On the same line github.com/elcuervo/hamming follows the same principle but to calculate the hamming distance between two hashes or vectors.

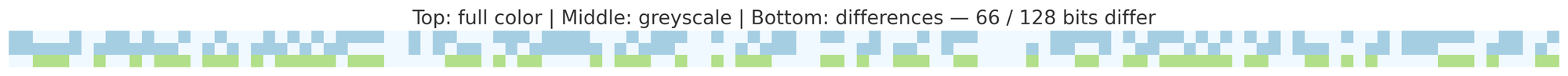

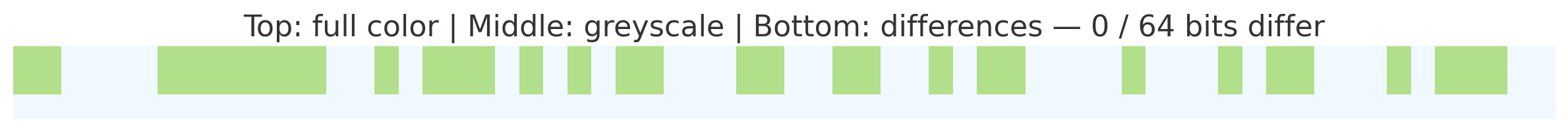

Now let’s repeat the same example with the previous photo. Instead of using the a resized image we’ll also modify the colors:

Our full color image with a 500px size give us the md5 hash: c4d2455e58ef1725991703ebaaa25f99 but our perceptual c3f9756332c22c5c and the second one a md5 hash of fdfcaeb0565fae0b83640b2651b8dc3a but perceptual one is c3f9756332c22c5c which are the exact same hash even if they are conceptually different photos.

MD5 compare

Perceptual hash compare

This is due to the fact that perceptual hashes (in this implementation) convert the image to greyscale to generate their respective vectors.

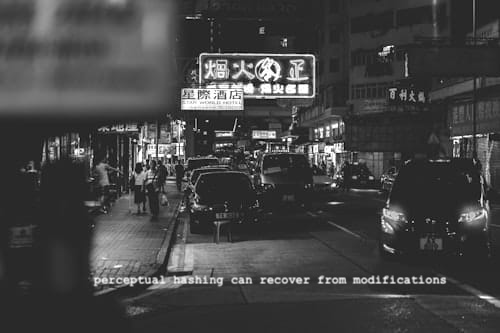

But how about this one?

This photo is not only in greyscale but also its pixels are modified. But it give us a perceptual hash of: c3f9756332c22ccc

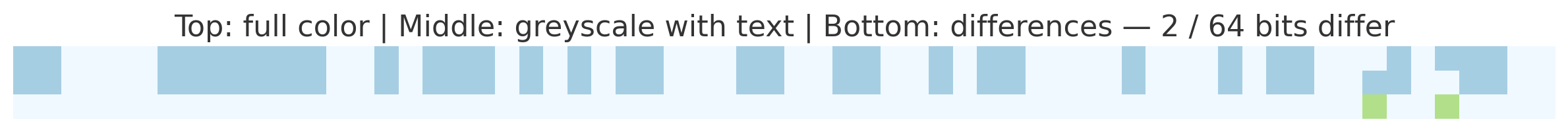

Perceptual Hash compare

As we can see even if they are fundamentally different things and even with modifications the distance between the two images is of 2 bits. By default the implementation uses 64 bits but it can use more to generate more dense hashes that include more information.

The whole objective is to be able to do the calculations and measure distances in the purest Ruby possible.

This algorithm is very simple to understand and allows it to be implemented in different languages.

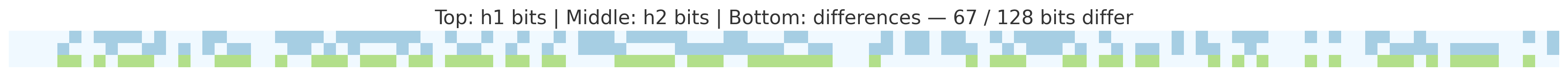

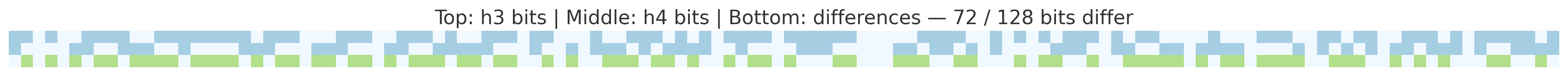

Visualization

This is a simple visualization that takes an image and creates how the hash is "seen" by the algorithm:

Conclusion

In next posts I’ll explore ways to index and search this hashes.